AI Workloads on the Cloud: Building High-Throughput, Low-Latency Data Pipelines

“In today’s data-driven landscape, the efficacy of AI and machine learning initiatives is inextricably linked to the performance of underlying data pipelines. Organizations face mounting challenges in processing unprecedented data volumes efficiently while minimizing latency, a critical factor for time-sensitive applications. Cloud platforms have emerged as the definitive solution, offering robust, scalable toolsets that enable high-throughput, low-latency data operations essential for AI workloads.”

The success of modern AI initiatives depends heavily on the efficiency of their underlying data pipelines. Inefficient pipelines create bottlenecks that severely limit an organization’s ability to extract value from AI investments. These constraints show up as prolonged model training cycles, delayed insights, and an inability to operationalize AI at scale, which erodes potential competitive advantages.

High throughput and low latency are distinct but complementary performance characteristics in AI pipeline architecture. Throughput is the volume of data the system can process per unit of time (for example, records or gigabytes per second). It is critical for training complex models on massive datasets. Latency is the delay between data ingestion and the data becoming available for processing or serving. It is essential for real-time applications such as fraud detection, dynamic pricing, and autonomous systems.
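The distinction is easier to see with numbers. The minimal Python sketch below computes both metrics from per-record timestamps. The `Event` record and its field names are hypothetical. A real pipeline would pull these timestamps from its metrics or tracing system.

```python
from dataclasses import dataclass
from statistics import quantiles

@dataclass
class Event:
    ingested_at: float  # seconds: when the record entered the pipeline (hypothetical field)
    served_at: float    # seconds: when the record became available downstream (hypothetical field)

def throughput(events: list[Event]) -> float:
    """Records per second over the observed window (first ingest to last serve)."""
    start = min(e.ingested_at for e in events)
    end = max(e.served_at for e in events)
    return len(events) / (end - start)

def latency_percentiles(events: list[Event]) -> dict[str, float]:
    """Median (p50) and tail (p99) end-to-end latency, in seconds."""
    latencies = sorted(e.served_at - e.ingested_at for e in events)
    cuts = quantiles(latencies, n=100, method="inclusive")  # 99 cut points
    return {"p50": cuts[49], "p99": cuts[98]}

# Usage: 100 records ingested 10 ms apart, each served 50 ms after ingestion.
events = [Event(i * 0.01, i * 0.01 + 0.05) for i in range(100)]
print(round(throughput(events), 1), latency_percentiles(events))
```

Throughput is an aggregate rate, while latency is a per-record distribution. This is why tail percentiles such as p99 matter for real-time use cases: an average can look healthy while a small share of records is slow.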

The business implications of well-architected AI pipelines extend far beyond technical performance metrics. Organizations with optimized pipelines enjoy accelerated time-to-market for AI-powered features, enhanced customer experiences through personalized, real-time interactions, and improved operational efficiency via predictive maintenance and intelligent automation. Furthermore, these capabilities often unlock entirely new revenue streams and business models previously unattainable with traditional data processing approaches.

Cloud environments have become the de facto standard for building these sophisticated pipelines due to their inherent elasticity, rich service ecosystems, and consumption-based cost models. However, merely migrating AI workloads to the cloud without strategic architectural considerations rarely delivers optimal results.

The Core Challenge: Balancing Volume, Velocity, and Veracity

- 1. Data Volume Challenges

The exponential growth in data generation presents formidable challenges for AI pipeline architects. Organizations now routinely process petabytes of structured, semi-structured, and unstructured data from diverse sources: traditional databases and log files, video streams, IoT sensor readings, and social media feeds. This diversity requires ingestion mechanisms that can accommodate heterogeneous formats without degrading performance.

Cloud storage solutions like Amazon S3, Azure Data Lake Storage, and Google Cloud Storage provide the foundation for optimizing AI training throughput. These platforms offer virtually limitless scalability, with multiple storage tiers that balance accessibility and cost-efficiency. However, simply using cloud storage is insufficient. To maximize read/write performance, architects must also implement:

- careful data partitioning schemes,
- deliberate compression strategies, and
- optimized file formats (Parquet, ORC, Avro).

In addition, serverless query engines that read data in place (e.g., Amazon Athena, or Google BigQuery through external tables) enable efficient exploratory analysis without moving massive datasets.
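Partitioning pays off because query engines can skip whole directories. Athena and Spark, among others, recognize Hive-style `key=value` path prefixes for this purpose. The standard-library sketch below only computes partition paths and shows which ones a filtered query would need to read. It makes no storage calls, and the partition keys `dt` and `region` are hypothetical.

```python
from collections import defaultdict

def partition_path(record: dict, keys: list[str]) -> str:
    """Build a Hive-style path such as 'dt=2024-05-01/region=eu'."""
    return "/".join(f"{k}={record[k]}" for k in keys)

def partition_records(records: list[dict], keys: list[str]) -> dict[str, list[dict]]:
    """Group records by partition path (in practice, one or more files per path)."""
    partitions: dict[str, list[dict]] = defaultdict(list)
    for r in records:
        partitions[partition_path(r, keys)].append(r)
    return dict(partitions)

def prune(partitions: dict[str, list[dict]], **filters: str) -> list[str]:
    """Return only the paths whose key=value segments match every filter.

    This mimics partition pruning: non-matching paths are never read.
    """
    selected = []
    for path in partitions:
        segments = dict(seg.split("=", 1) for seg in path.split("/"))
        if all(segments.get(k) == v for k, v in filters.items()):
            selected.append(path)
    return selected

records = [
    {"dt": "2024-05-01", "region": "eu", "value": 1},
    {"dt": "2024-05-01", "region": "us", "value": 2},
    {"dt": "2024-05-02", "region": "eu", "value": 3},
]
parts = partition_records(records, ["dt", "region"])
print(prune(parts, region="eu"))
```

Choosing partition keys that match the most common query filters (often date, then a low-cardinality dimension) maximizes how much data pruning can skip.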

- 2. Data Velocity Requirements

The velocity dimension introduces the critical distinction between batch and streaming processing paradigms, each with unique characteristics suited to specific AI workload profiles. Traditional batch processing remains essential for large-scale model training, where completeness takes precedence over immediacy. However, an expanding category of AI applications demands real-time or near-real-time data processing, including:

- ● Financial services applications requiring instant fraud detection

- ● E-commerce platforms implementing dynamic pricing algorithms

- ● Manufacturing environments utilizing predictive maintenance

- ● Healthcare systems monitoring patient vitals for immediate intervention

- ● Customer experience platforms delivering real-time personalization

Meeting these velocity requirements necessitates fundamentally different architectural approaches, with streaming-first designs leveraging technologies like Apache Kafka, Amazon Kinesis, or Google Pub/Sub as high-throughput message backbones.
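The difference from batch processing shows up in when results are emitted. A batch job waits for the full dataset. A streaming job emits each window's result as soon as that window closes. The sketch below is a minimal tumbling-window counter, assuming events arrive in event-time order. Engines such as Flink or Dataflow add what this omits: watermarks, late-data handling, and fault-tolerant state.

```python
from typing import Iterable, Iterator

def tumbling_counts(events: Iterable[tuple[float, str]], window: float) -> Iterator[tuple[float, int]]:
    """Yield (window_start, count) as soon as each fixed-size window closes.

    Assumes in-order event times; windows with no events are not emitted.
    """
    current_start = None
    count = 0
    for ts, _key in events:
        start = (ts // window) * window
        if current_start is None:
            current_start = start
        elif start != current_start:
            yield current_start, count  # window closed: emit without waiting for more data
            current_start, count = start, 0
        count += 1
    if current_start is not None:
        yield current_start, count  # flush the final window at end of stream

stream = [(0.5, "a"), (1.2, "b"), (1.9, "c"), (3.1, "d")]
for window_start, n in tumbling_counts(stream, window=1.0):
    print(window_start, n)
```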

- 3. Data Transformation Complexity

The transformation layer introduces additional complexity that significantly impacts pipeline performance. Raw data rarely arrives in formats directly suitable for AI model consumption, requiring extensive preprocessing through:

- ● Data cleaning operations to handle missing values, outliers, and inconsistencies

- ● Normalization and standardization to align scales and distributions

- ● Feature engineering to derive predictive variables

- ● Dimensionality reduction to improve computational efficiency

- ● Aggregation to create time-series features or entity-centric views

Each transformation step introduces potential latency and throughput constraints. Low-latency preprocessing for AI inference often relies on feature stores (discussed later) to pre-compute and cache derived features, minimizing transformation overhead at inference time.
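A practical consequence is that preprocessing parameters should be computed once, offline, and then reused unchanged at inference time. This keeps training and serving consistent and avoids recomputing statistics per request. The `Standardizer` class below is a hypothetical minimal sketch combining two of the steps listed above: median imputation (cleaning) and z-score standardization.

```python
from dataclasses import dataclass
from statistics import mean, median, pstdev
from typing import Optional

@dataclass(frozen=True)
class Standardizer:
    """Imputes missing values with the training median, then z-scores."""
    fill_value: float
    mu: float
    sigma: float

    @classmethod
    def fit(cls, values: list[Optional[float]]) -> "Standardizer":
        present = [v for v in values if v is not None]
        fill = median(present)
        imputed = [fill if v is None else v for v in values]
        sigma = pstdev(imputed) or 1.0  # avoid division by zero for constant features
        return cls(fill, mean(imputed), sigma)

    def transform(self, value: Optional[float]) -> float:
        # Same parameters at training and inference: no train/serve skew.
        v = self.fill_value if value is None else value
        return (v - self.mu) / self.sigma

# Fit once on training data; persist and reuse the same parameters at inference.
scaler = Standardizer.fit([1.0, 2.0, None, 3.0])
print(scaler.transform(3.0), scaler.transform(None))
```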

- 4. Infrastructure Bottlenecks

Even well-designed pipelines face infrastructure constraints that limit overall performance. Common bottlenecks include:

- ● Network bandwidth limitations when transferring large datasets

- ● I/O constraints in storage subsystems during high-concurrency operations

- ● Compute resource saturation during complex transformation operations

- ● Orchestration overhead for large-scale, distributed processing

- ● Memory constraints when processing high-dimensional feature spaces

Traditional on-premises environments struggle with these bottlenecks because their capacity is fixed and cannot scale elastically with variable workloads. Cloud environments mitigate these constraints through auto-scaling, specialized compute options (GPU/TPU instances), and purpose-built services for each pipeline stage. These benefits materialize only if architects apply design patterns that take advantage of them.
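A back-of-the-envelope calculation shows why network bandwidth appears first in that list. The helper below estimates transfer time from dataset size and link speed. The `efficiency` factor (the fraction of nominal bandwidth actually achieved) is an illustrative assumption, not a measured value.

```python
def transfer_time_hours(dataset_bytes: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Estimate wall-clock hours to move a dataset over a network link.

    efficiency: assumed share of nominal bandwidth achieved after protocol
    overhead and contention (0.8 is an illustrative guess).
    """
    bits = dataset_bytes * 8
    effective_bps = link_gbps * 1e9 * efficiency
    return bits / effective_bps / 3600

# 1 PB over a 10 Gbps link at the assumed 80% efficiency.
hours = transfer_time_hours(1e15, 10)
print(f"{hours:.1f} h = {hours / 24:.1f} days")
```

At these assumed figures, a single petabyte takes roughly 278 hours (about 11.6 days) to move. This is why high-throughput designs favor processing data where it already lives and spreading transfers across partitions and parallel streams.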

Architectural Strategies for High Performance

- 1. Decoupled, Component-Based Design

Modern AI data pipelines benefit significantly from adopting microservices-inspired architectural principles. By decomposing pipelines into discrete, loosely coupled components with well-defined interfaces, organizations can achieve greater flexibility, resilience, and performance optimization. This approach parallels the evolution observed in software development, where monolithic applications have given way to containerized microservices.

The core pipeline stages (ingest, process, store, and serve) should operate as independent components, enabling:

- ● Independent scaling of individual stages based on unique resource requirements

- ● Targeted optimization of each component for its specific performance characteristics

- ● Improved fault isolation and resilience, preventing cascade failures

- ● Simplified maintenance and updates, allowing upgrades to specific components without disrupting the entire pipeline
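The sketch below shows these principles at the smallest possible scale. Each stage communicates only through a bounded queue, so each stage can run a different number of workers. The stage functions (doubling, identity) are hypothetical placeholders. Python threads illustrate the structure, not real parallel speed-up. In the cloud, each stage would typically be a separately scaled service.

```python
import queue
import threading
from typing import Callable, Iterable

SENTINEL = object()  # end-of-stream marker passed between stages

def run_stage(fn: Callable, inbox: queue.Queue, outbox: queue.Queue, workers: int) -> list[threading.Thread]:
    """Start `workers` threads that apply `fn` to each item and forward the result."""
    def loop() -> None:
        while True:
            item = inbox.get()
            if item is SENTINEL:
                inbox.put(SENTINEL)  # re-queue so sibling workers also stop
                return
            outbox.put(fn(item))
    threads = [threading.Thread(target=loop) for _ in range(workers)]
    for t in threads:
        t.start()
    return threads

def pipeline(records: Iterable[int]) -> list[int]:
    ingested: queue.Queue = queue.Queue(maxsize=100)  # bounded buffers decouple stages
    processed: queue.Queue = queue.Queue(maxsize=100)
    stored: queue.Queue = queue.Queue()
    # Independent scaling: 4 workers for "process", 1 for "store" (placeholder functions).
    process = run_stage(lambda r: r * 2, ingested, processed, workers=4)
    store = run_stage(lambda r: r, processed, stored, workers=1)
    for r in records:  # the "ingest" stage
        ingested.put(r)
    ingested.put(SENTINEL)
    for t in process:
        t.join()
    processed.put(SENTINEL)  # all processors finished: signal the store stage
    for t in store:
        t.join()
    return [stored.get() for _ in range(stored.qsize())]

print(sorted(pipeline(range(10))))
```

Output order is not guaranteed when a stage runs several workers. This is one of the trade-offs of parallel stages that downstream consumers must tolerate.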

Cloud providers now offer managed pipeline orchestration services that embrace this componentized approach:

- ● Amazon SageMaker Pipelines provides containerized, reusable processing steps with automated parameter passing

- ● Google Vertex AI Pipelines leverages Kubeflow to orchestrate container-based components

- ● Azure ML Pipelines emphasizes reusable components with dependency management

- ● Kubeflow Pipelines offers a platform-agnostic approach using Kubernetes as the execution environment

These orchestration platforms manage directed acyclic graphs (DAGs) of operations, handling dependency resolution, resource allocation, and state management across distributed components.
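Conceptually, dependency resolution over a DAG reduces to topological sorting. The sketch below uses Python's standard-library `graphlib`, not the API of any orchestration service listed above. It groups steps into waves that could run in parallel. The step names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each step maps to the set of steps it depends on.
dag = {
    "ingest": set(),
    "validate": {"ingest"},
    "features": {"validate"},
    "train": {"features"},
    "evaluate": {"train"},
    "batch_score": {"features"},
}

def execution_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group steps into waves; steps within a wave are mutually independent.

    Raises graphlib.CycleError if the graph contains a cycle (i.e. is not a DAG).
    """
    ts = TopologicalSorter(graph)
    ts.prepare()
    waves = []
    while ts.is_active():
        ready = sorted(ts.get_ready())
        waves.append(ready)
        ts.done(*ready)  # mark the wave complete, unlocking its dependents
    return waves

print(execution_waves(dag))
```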

- 2. Parallel Processing and Distribution

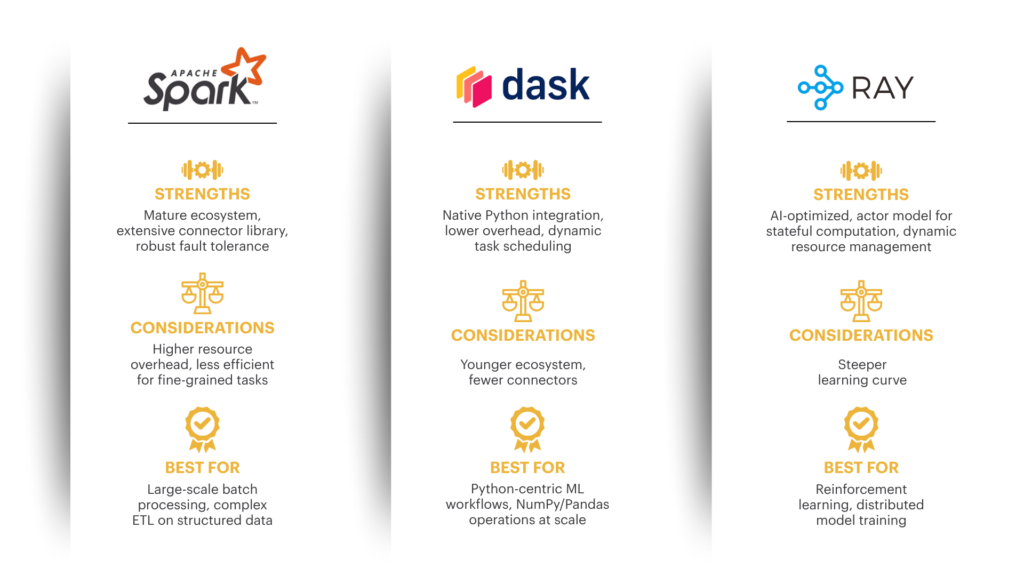

Effective parallelization represents a cornerstone strategy for achieving high-throughput AI data pipelines. Cloud environments provide multiple options for distributed data processing, with frameworks like Apache Spark and Dask emerging as predominant solutions.

When comparing distributed data processing frameworks for AI workloads, several factors merit consideration:

Cloud platforms further enhance parallelization through:

- ● Serverless and managed ETL services (AWS Glue, Google Cloud Dataflow, Azure Data Factory) that automatically manage scaling

- ● Infrastructure elasticity through auto-scaling compute clusters (EMR, Dataproc, HDInsight)

- ● Specialized hardware acceleration via GPU and TPU integration
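Spark and Dask differ in many details, but both rest on the same pattern. Data is split into partitions, each partition is processed independently (map), and partial results are merged (reduce). The sketch below shows that pattern with the standard library. It uses threads for portability. CPU-bound Python work would instead use `ProcessPoolExecutor` or a distributed framework.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce

def map_partition(lines: list[str]) -> Counter:
    """Map step: count tokens within one partition, independently of all others."""
    counts: Counter = Counter()
    for line in lines:
        counts.update(line.split())
    return counts

def parallel_token_counts(partitions: list[list[str]], workers: int = 4) -> Counter:
    # Partitions share no state, so they can be processed concurrently;
    # the reduce step then merges the partial counters.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(map_partition, partitions))
    return reduce(lambda a, b: a + b, partials, Counter())

partitions = [["a b", "b c"], ["c c a"]]
print(parallel_token_counts(partitions))
```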

Choosing between GPUs and TPUs for a given AI pipeline requires careful analysis. GPUs excel at general-purpose parallel computing and have broad framework support, which makes them versatile across diverse workloads. TPUs can offer better performance and cost-efficiency for specific deep learning workloads, particularly large matrix-heavy training built on TensorFlow or JAX, but their application scope is narrower. The decision should balance workload characteristics, framework preferences, and cost.

- 3. Caching and Optimization

Strategic caching is one of the most effective optimization techniques in high-performance AI pipelines. Feature stores have emerged as a specialized caching layer that sits between raw data processing and model training or inference. When implemented well, a feature store delivers several advantages for high-throughput AI models:

- ● Elimination of redundant computation for frequently used features

- ● Consistency across training and inference environments

- ● Decreased latency for real-time inference through pre-computation

- ● Feature sharing across multiple models and teams

- ● Version control and lineage tracking for compliance and reproducibility

Major cloud platforms now offer managed feature store solutions, including Amazon SageMaker Feature Store, Vertex AI Feature Store, and Azure Feature Store (Preview), each optimized for its respective environment.
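The core mechanism behind training/inference consistency is having two read paths over the same feature data:

- a low-latency "latest value" lookup for serving, and
- a point-in-time lookup for building training sets without leaking future values.

The toy class below illustrates both read paths. It is a hypothetical sketch, not the API of any of the managed services above.

```python
import bisect
from collections import defaultdict
from typing import Optional

class MiniFeatureStore:
    """Toy feature store: append-only offline history plus a latest-value online view."""

    def __init__(self) -> None:
        self._history = defaultdict(list)  # (entity, feature) -> sorted [(ts, value)]
        self._online = {}                  # (entity, feature) -> latest value

    def write(self, entity: str, feature: str, ts: float, value: float) -> None:
        key = (entity, feature)
        bisect.insort(self._history[key], (ts, value))
        # Online view reflects the newest timestamp, even if writes arrive out of order.
        self._online[key] = self._history[key][-1][1]

    def get_online(self, entity: str, feature: str) -> Optional[float]:
        """Low-latency read used at inference time."""
        return self._online.get((entity, feature))

    def get_as_of(self, entity: str, feature: str, ts: float) -> Optional[float]:
        """Point-in-time read: the value as known at `ts`, never a later one."""
        rows = self._history.get((entity, feature), [])
        i = bisect.bisect_right(rows, (ts, float("inf")))
        return rows[i - 1][1] if i else None

fs = MiniFeatureStore()
fs.write("user1", "spend_7d", ts=10.0, value=5.0)
fs.write("user1", "spend_7d", ts=20.0, value=8.0)
print(fs.get_online("user1", "spend_7d"), fs.get_as_of("user1", "spend_7d", 15.0))
```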

Beyond feature stores, additional optimization strategies include:

- ● File format selection based on access patterns (columnar formats like Parquet for analytical queries, row-based formats for record-level access)

- ● Compression algorithm selection balancing CPU overhead against storage efficiency and throughput

- ● Data layout optimization through partitioning and indexing strategies

- ● Query optimization through materialized views and aggregation tables

- ● Computation pushdown to storage layer where applicable
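The compression trade-off can be measured directly. The sketch below uses DEFLATE (the algorithm behind gzip, available through Python's `zlib`) as a stand-in for the codecs columnar formats typically offer. It reports the ratio gained and the CPU time spent at each compression level. The sample data is synthetic and illustrative only.

```python
import time
import zlib

def compression_profile(data: bytes, levels=(1, 6, 9)) -> dict[int, tuple[float, float]]:
    """For each zlib level, return (compression ratio, seconds spent compressing)."""
    profile = {}
    for level in levels:
        start = time.perf_counter()
        compressed = zlib.compress(data, level)
        elapsed = time.perf_counter() - start
        if zlib.decompress(compressed) != data:  # compression must be lossless
            raise RuntimeError("round trip failed")
        profile[level] = (len(data) / len(compressed), elapsed)
    return profile

# Synthetic, repetitive CSV-like rows, similar in spirit to sensor logs.
sample = "".join(f"2024-05-01,sensor-{i % 4},{20 + (i % 7) * 0.5}\n" for i in range(2000)).encode()
for level, (ratio, secs) in compression_profile(sample).items():
    print(f"level {level}: ratio {ratio:.1f}x, {secs * 1e3:.2f} ms")
```

Running this kind of profile on a representative sample of real data is a cheap way to choose a codec and level before committing to a storage layout.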

- 4. Asynchronous Processing and Event-Driven Architectures

Event-driven architecture patterns can significantly improve both throughput and latency in AI pipelines by decoupling data producers from consumers. This approach enables:

- ● Buffer management for workload spikes through message queues

- ● Parallel processing of independent data streams

- ● Backpressure mechanisms that prevent system saturation

- ● Reactive processing triggered by state changes or data availability
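Buffering and backpressure can be illustrated with a bounded in-process queue. When a consumer falls behind, the queue fills and the producer is suspended instead of overwhelming downstream resources. The sketch below uses `asyncio.Queue` with a `maxsize`. Sizes and delays are illustrative. Brokers such as Kafka achieve similar effects across processes through consumer-controlled fetching, which this sketch does not model.

```python
import asyncio

async def producer(q: asyncio.Queue, n: int, log: list) -> None:
    for i in range(n):
        await q.put(i)  # suspends while the queue is full: this is the backpressure
        log.append(("produced", i, q.qsize()))
    await q.put(None)   # end-of-stream marker

async def consumer(q: asyncio.Queue, out: list) -> None:
    while (item := await q.get()) is not None:
        await asyncio.sleep(0.001)  # simulate slower downstream processing
        out.append(item)

async def main(n: int = 20, maxsize: int = 5) -> tuple[list, list]:
    q: asyncio.Queue = asyncio.Queue(maxsize=maxsize)  # bounded buffer absorbs short spikes
    log: list = []
    out: list = []
    await asyncio.gather(producer(q, n, log), consumer(q, out))
    return log, out

log, out = asyncio.run(main())
print(len(out), max(size for _, _, size in log))
```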

Event-driven architectures for low-latency AI pipelines typically incorporate:

- ● High-throughput message brokers (Kafka, Amazon MSK, Google Pub/Sub, Azure Event Hubs)

- ● Serverless compute triggers (Lambda, Cloud Functions, Azure Functions) for event processing

- ● Stream processing engines (Flink, Spark Streaming, Dataflow) for complex event processing

- ● Dead-letter queues and retry mechanisms for resilience
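The retry-then-dead-letter pattern in the last item is simple to express. Each message gets a bounded number of attempts with exponential backoff. A message that keeps failing is parked with its error so it does not block the rest of the stream. The function below is a hypothetical, broker-agnostic sketch. Managed services usually provide this behavior through configuration.

```python
import time

def process_with_retry(messages, handler, max_attempts=3, base_delay=0.01):
    """Deliver each message to `handler`; after `max_attempts` failures,
    route it to a dead-letter list instead of blocking the stream."""
    succeeded, dead_letters = [], []
    for msg in messages:
        for attempt in range(1, max_attempts + 1):
            try:
                succeeded.append(handler(msg))
                break
            except Exception as exc:
                if attempt == max_attempts:
                    dead_letters.append({"message": msg, "error": repr(exc), "attempts": attempt})
                else:
                    time.sleep(base_delay * 2 ** (attempt - 1))  # exponential backoff

    return succeeded, dead_letters

def handler(x: int) -> int:
    if x < 0:
        raise ValueError("negative amount")  # a "poison" message that always fails
    return x * 10

ok, dlq = process_with_retry([1, -2, 3], handler)
print(ok, dlq)
```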

These architectural patterns collectively establish the foundation for high-performance AI data pipelines, but their effective implementation requires strategic selection from the growing ecosystem of cloud services.

Choosing the Right Cloud Tools and Services

The proliferation of cloud services for AI workloads presents both opportunity and complexity for technical leaders. Each major cloud provider offers a comprehensive ecosystem spanning the entire AI pipeline lifecycle, with varying strengths and optimization profiles.

- 1. AWS AI Pipeline Services

Amazon Web Services provides a mature, deeply integrated ecosystem centered around Amazon SageMaker as its ML platform cornerstone:

- ● Data Ingestion: Kinesis Data Streams/Firehose, MSK (Managed Kafka), IoT Core

- ● Processing: SageMaker Processing, EMR (Elastic MapReduce), Glue ETL

- ● Feature Management: SageMaker Feature Store

- ● Orchestration: SageMaker Pipelines, Step Functions, AWS Data Pipeline

- ● Storage: S3, FSx for Lustre (high-performance file system), DynamoDB

- ● Analytics: Redshift, Athena, EMR

- ● Inference: SageMaker Endpoints, Lambda

AWS strengths include comprehensive service coverage, mature ecosystem integration, and flexibility through both managed and self-managed options. The platform excels in enterprises with existing AWS investments and those requiring fine-grained control over infrastructure configuration.

- 2. Google Cloud AI Pipeline Services

Google Cloud Platform offers an AI-centric approach with Vertex AI as its unified platform:

- ● Data Ingestion: Pub/Sub, Dataflow, Cloud Data Fusion

- ● Processing: Dataflow, Dataproc, Cloud Functions

- ● Feature Management: Vertex AI Feature Store

- ● Orchestration: Vertex AI Pipelines, Cloud Composer (managed Airflow)

- ● Storage: Cloud Storage, Bigtable, Cloud SQL

- ● Analytics: BigQuery, Dataproc

- ● Inference: Vertex AI Endpoints, Cloud Run

Google Cloud differentiates through its serverless data processing capabilities (particularly Dataflow), tight integration with popular open-source frameworks, and cohesive ML platform in Vertex AI. The platform particularly suits organizations with data science teams familiar with TensorFlow and those prioritizing managed services over infrastructure management.

- 3. Microsoft Azure AI Pipeline Services

Microsoft Azure provides enterprise-focused AI infrastructure with Azure Machine Learning as its central platform:

- ● Data Ingestion: Event Hubs, IoT Hub, Kafka on HDInsight

- ● Processing: Azure Databricks, Synapse Analytics, Azure Functions

- ● Feature Management: Azure Feature Store (Preview)

- ● Orchestration: Azure Machine Learning Pipelines, Data Factory

- ● Storage: Azure Data Lake Storage, Blob Storage, Azure SQL

- ● Analytics: Synapse Analytics, Azure Databricks

- ● Inference: Azure ML Endpoints, Azure Kubernetes Service

Azure’s strengths include seamless integration with enterprise Microsoft technologies, hybrid deployment capabilities, and accessible UI-driven development experiences. It presents a compelling option for organizations with significant investments in Microsoft’s ecosystem or those requiring robust hybrid-cloud capabilities.

- 4. Multi-Cloud and Platform-Agnostic Approaches

Organizations pursuing multi-cloud strategies often leverage platform-agnostic tools, particularly those built around Kubernetes:

- ● Kubeflow Pipelines: Open-source pipeline orchestration running on any Kubernetes cluster

- ● MLflow: Experiment tracking, project packaging, and a model registry that work regardless of the underlying infrastructure

- ● Apache Airflow: Workflow orchestration deployable across environments

- ● Seldon Core: Model serving layer for Kubernetes environments

These approaches prioritize portability and vendor neutrality but typically require greater in-house operational expertise.

- 5. Selection Framework for Technical Leaders

When evaluating platforms for AI data pipelines, consider these criteria:

- ● Existing Cloud Footprint: Leverage existing expertise and negotiate volume-based discounts

- ● Workload Characteristics: Match platform strengths to specific requirements (batch vs. real-time)

- ● Team Skills: Align with existing technical competencies to accelerate adoption

- ● Integration Requirements: Consider connectivity to existing data sources and enterprise systems

- ● Governance Needs: Evaluate security, compliance, and monitoring capabilities

- ● Cost Structure: Analyze both direct costs and operational overhead across alternatives

- ● Lock-In Concerns: Balance platform-specific optimizations against portability requirements

A thorough comparison of pipeline orchestration tools for AI workloads should evaluate performance, usability, monitoring capabilities, ecosystem integration, and total cost of ownership.

Best Practices for Implementation and Management

Successful implementation of high-performance AI data pipelines requires attention to operational excellence beyond initial architecture design. The following best practices ensure sustainable performance, reliability, and cost-effectiveness:

- 1. Comprehensive Monitoring and Observability

Implement multi-layer monitoring covering:

- ● Infrastructure Metrics: Compute utilization, memory consumption, network throughput

- ● Pipeline Performance: Component-level latency, throughput, queue depths, backpressure indicators

- ● Data Quality: Schema validation results, outlier detection, drift metrics

- ● Business Outcomes: Model performance, inference accuracy, business KPI impact
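For the drift metrics in the data-quality layer, the population stability index (PSI) is one widely used option, though not the only one. It compares a live sample's distribution against a reference (for example, training) sample. The sketch below takes equal-width bins from the reference range and applies a small floor to avoid `log(0)`. Both choices are simplifying assumptions.

```python
import math

def population_stability_index(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """PSI = sum((a_i - e_i) * ln(a_i / e_i)) over bins of the reference range."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to width 1 for a constant reference

    def shares(sample: list[float]) -> list[float]:
        counts = [0] * bins
        for x in sample:
            idx = int((x - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values to edge bins
        return [max(c / len(sample), 1e-6) for c in counts]  # floor avoids log(0)

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

reference = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]
print(population_stability_index(reference, reference), population_stability_index(reference, shifted))
```

A commonly used rule of thumb treats PSI values above roughly 0.25 as significant drift worth investigating. Thresholds should nevertheless be calibrated per feature.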

Leading organizations integrate these metrics into unified observability platforms with:

- ● Real-time dashboards for operational visibility

- ● Anomaly detection with machine learning-based alerting

- ● Historical analysis for capacity planning and trend identification

- ● Correlation analysis across pipeline stages to identify cascade effects
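Before adopting machine-learning-based alerting, many teams start with a simple statistical baseline. The sketch below uses a rolling z-score, a deliberately simpler technique than the ML-based detectors mentioned above. It flags a metric sample (for example, a stage's latency) that deviates strongly from the recent window. The window and threshold values are illustrative assumptions.

```python
from collections import deque
from statistics import mean, pstdev

class RollingZScoreAlert:
    """Flag a sample more than `threshold` standard deviations from the
    mean of the previous `window` samples."""

    def __init__(self, window: int = 30, threshold: float = 3.0) -> None:
        self.history: deque = deque(maxlen=window)
        self.threshold = threshold

    def observe(self, value: float) -> bool:
        anomalous = False
        if len(self.history) >= 2:
            mu, sigma = mean(self.history), pstdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                anomalous = True
        self.history.append(value)  # the sample joins the baseline either way
        return anomalous

detector = RollingZScoreAlert(window=10, threshold=3.0)
flags = [detector.observe(ms) for ms in [100.0, 101.0] * 10]  # stable latencies (ms)
print(any(flags), detector.observe(500.0))  # a latency spike
```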

- 2. Infrastructure as Code (IaC)

Treat pipeline infrastructure as software through:

- ● Declarative definition of all infrastructure components using tools like Terraform, CloudFormation, or Pulumi

- ● Version control of infrastructure templates alongside application code

- ● Automated testing of infrastructure deployments

- ● Immutable infrastructure patterns rather than in-place modifications

- ● Parameterized environments enabling consistent deployment across development, testing, and production

This approach ensures reproducibility, facilitates disaster recovery, and enables consistent governance across environments.
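Parameterized environments come down to one template rendered with different inputs, so structure cannot silently diverge between development and production. Terraform, CloudFormation, and Pulumi each express this with their own variables and parameters. The tool-agnostic sketch below only renders JSON, and every field name and instance label in it is a hypothetical placeholder.

```python
import json
from dataclasses import dataclass

@dataclass(frozen=True)
class EnvParams:
    name: str
    worker_count: int
    instance_type: str     # placeholder label, not a real instance type
    retain_data_days: int

def render(params: EnvParams) -> str:
    """Render one declarative definition from a shared template.

    Only parameter values differ between environments; the structure is identical.
    """
    template = {
        "pipeline": f"feature-pipeline-{params.name}",
        "compute": {"workers": params.worker_count, "instance_type": params.instance_type},
        "storage": {"lifecycle_days": params.retain_data_days},
    }
    return json.dumps(template, indent=2, sort_keys=True)

dev = render(EnvParams("dev", worker_count=2, instance_type="small", retain_data_days=7))
prod = render(EnvParams("prod", worker_count=16, instance_type="large", retain_data_days=365))
print(prod)
```

Because the rendered output is deterministic (`sort_keys=True`), it can be committed, diffed in code review, and checked in CI like any other artifact.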

- 3. Data Governance and Lineage

As AI systems increasingly impact critical decisions, robust governance becomes essential:

- ● Implement automated data quality validation at ingestion points

- ● Maintain comprehensive lineage tracking from source systems through transformations to model inputs

- ● Leverage cloud-native catalog services (AWS Glue Data Catalog, Google Cloud Data Catalog, Microsoft Purview, formerly Azure Purview)

- ● Implement appropriate access controls and encryption for sensitive data

- ● Enable automated compliance reporting for regulatory requirements
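The first two items can start small. Validate every record against an expected schema at the ingestion point, route failures aside instead of dropping them, and stamp lineage metadata on everything that passes. The schema, field names, and source label below are hypothetical. Production systems would typically use a schema registry or a validation library instead of hand-written type checks.

```python
from datetime import datetime, timezone

# Hypothetical expected schema: field name -> required Python type.
SCHEMA = {"order_id": str, "amount": float, "currency": str}

def validate_batch(records: list[dict], source: str) -> tuple[list[dict], list[dict]]:
    """Split a batch into valid and rejected records, attaching lineage metadata."""
    ingested_at = datetime.now(timezone.utc).isoformat()
    valid, rejected = [], []
    for rec in records:
        errors = [f"{field}: expected {typ.__name__}"
                  for field, typ in SCHEMA.items()
                  if not isinstance(rec.get(field), typ)]
        lineage = {"source": source, "ingested_at": ingested_at}
        if errors:
            rejected.append({"record": rec, "errors": errors, "lineage": lineage})
        else:
            valid.append({**rec, "_lineage": lineage})
    return valid, rejected

batch = [
    {"order_id": "a1", "amount": 9.5, "currency": "EUR"},
    {"order_id": "a2", "amount": "oops", "currency": "EUR"},
]
valid, rejected = validate_batch(batch, source="orders_api")
print(len(valid), rejected[0]["errors"])
```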

- 4. Cost Optimization Strategies

The elastic nature of cloud environments requires proactive cost management through:

- ● Resource right-sizing based on actual utilization patterns

- ● Strategic use of spot/preemptible instances for fault-tolerant workloads

- ● Storage tiering with automatic lifecycle policies

- ● Caching frequently accessed datasets to reduce reprocessing costs

- ● Reserved capacity purchases for predictable baseline workloads

Results vary by workload, but organizations that apply these strategies can often cut costs by 30-50% compared with unoptimized deployments.
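The arithmetic behind mixing capacity types is straightforward:

- predictable baseline hours go on reserved capacity,
- fault-tolerant burst hours go on spot capacity, and
- some spot work is re-run after interruptions.

The function below compares that mix against running everything on demand. All rates, discounts, and the rework factor are hypothetical inputs, not quoted provider pricing.

```python
def compare_capacity_costs(on_demand_rate: float, baseline_hours: float, burst_hours: float,
                           reserved_discount: float, spot_discount: float,
                           spot_rework: float) -> dict[str, float]:
    """Compare all-on-demand cost with reserved (baseline) plus spot (burst) capacity.

    spot_rework: assumed fraction of burst hours re-run after spot interruptions.
    """
    all_on_demand = on_demand_rate * (baseline_hours + burst_hours)
    reserved = on_demand_rate * (1 - reserved_discount) * baseline_hours
    spot = on_demand_rate * (1 - spot_discount) * burst_hours * (1 + spot_rework)
    optimized = reserved + spot
    return {"on_demand": all_on_demand, "optimized": optimized,
            "savings_pct": 100 * (1 - optimized / all_on_demand)}

# Hypothetical month: 500 baseline hours, 300 burst hours at a $1.00/h on-demand rate.
result = compare_capacity_costs(1.0, baseline_hours=500, burst_hours=300,
                                reserved_discount=0.3, spot_discount=0.6, spot_rework=0.1)
print(result)
```

With these illustrative inputs, the mix costs 482 units against 800 on demand. Real savings depend on actual discounts, interruption rates, and how accurately the baseline is forecast.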

- 5. Continuous Integration/Continuous Deployment (CI/CD) for Pipelines

Apply software engineering best practices to pipeline development:

- ● Implement automated testing for individual pipeline components

- ● Deploy canary release patterns for pipeline updates

- ● Maintain staging environments mirroring production characteristics

- ● Automate validation of pipeline outputs against expected quality metrics

- ● Enable rapid rollback capabilities for failed deployments

These MLOps practices accelerate innovation while maintaining stability and reliability.
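Automated output validation often takes the form of a quality gate that CI runs before a pipeline change is promoted. The sketch below is a hypothetical gate over row counts and null rates, followed by a test written in the style pytest collects (a `test_` function with plain `assert`). The thresholds and column names are illustrative.

```python
def quality_gate(rows: list[dict], min_rows: int, max_null_rate: float, required: list[str]) -> list[str]:
    """Return a list of failures; an empty list means the output may be promoted."""
    failures = []
    if len(rows) < min_rows:
        failures.append(f"row count {len(rows)} < {min_rows}")
    for col in required:
        nulls = sum(1 for r in rows if r.get(col) is None)
        rate = nulls / len(rows) if rows else 1.0
        if rate > max_null_rate:
            failures.append(f"{col}: null rate {rate:.2%} > {max_null_rate:.2%}")
    return failures

def test_gate_blocks_sparse_output() -> None:
    rows = [{"user_id": 1, "score": 0.4}, {"user_id": 2, "score": None}]
    assert quality_gate(rows, min_rows=1, max_null_rate=0.1, required=["user_id", "score"]) == [
        "score: null rate 50.00% > 10.00%"
    ]

test_gate_blocks_sparse_output()
```

Returning the full list of failures, rather than stopping at the first one, gives engineers the complete picture in a single CI run.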

Conclusion

High-throughput, low-latency data pipelines represent the essential foundation upon which successful AI initiatives are built. As organizations increasingly depend on AI for competitive differentiation, the underlying data infrastructure becomes a critical strategic asset requiring thoughtful architecture, technology selection, and operational excellence.

Cloud platforms provide comprehensive toolsets to address the complex requirements of modern AI workloads, offering elasticity, specialized hardware acceleration, and managed services that greatly reduce operational complexity. However, technology selection alone is insufficient. Successful implementations require architectural patterns that balance throughput and latency requirements while maintaining cost-effectiveness and operational sustainability.

Organizations that excel in this domain share common characteristics: they approach pipelines as strategic assets rather than technical plumbing, they implement cloud-native architectures rather than lifting-and-shifting legacy approaches, and they continuously optimize based on evolving requirements and workload characteristics.

Motherson Technology Services helps enterprises navigate this complex landscape through our specialized AI infrastructure practice. Our approach combines deep technical expertise in cloud-native architectures with practical experience implementing high-performance pipelines across diverse industries. By partnering with Motherson, organizations can accelerate their AI initiatives through optimized data pipelines that deliver both the performance and reliability required for production-grade artificial intelligence systems.


About the Author:

Dr. Bishan Chauhan

Head – Cloud Services & AI / ML Practice

Motherson Technology Services

With a versatile leadership background spanning over 25 years, Bishan has demonstrated strategic prowess by successfully delivering complex global software development and technology projects to strategic clients. Spearheading Motherson’s entire Cloud Business and global AI/ML initiatives, he leverages his Ph.D. in Computer Science & Engineering specializing in Machine Learning and Artificial Intelligence. Bishan’s extensive experience includes roles at Satyam Computer Services Ltd and HCL prior to his 21+ years of dedicated service to the Motherson Group.